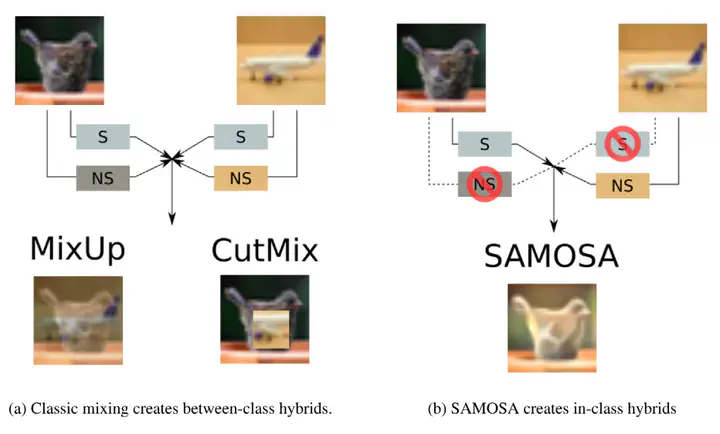

SAMOSA learns to separate semantic and non-semantic contents from different images and then recombine them.

Abstract

Making effective use of unlabeled examples is crucial to improving performance in semi-supervised learning. In this work, we introduce SAMOSA, a framework based on semantic augmentation that mixes semantic components from labeled examples with non-semantic characteristics from unlabeled data. Our approach relies on a novel reconstruction module that can be grafted onto most state-of-the-art networks. It has two main components: an architectural module optimized with an unsupervised loss to disentangle semantic representations from auxiliary non-semantic ones, and a semantic augmentation scheme that uses this disentangling module to generate artificially labeled examples, preserving known class information while controlling auxiliary variations. We demonstrate that our method improves the performance of models trained with the standard semi-supervised procedures Mean Teacher (Tarvainen and Valpola, 2017), MixMatch (Berthelot et al., 2019), and FixMatch (Sohn et al., 2020).
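To make the recombination idea concrete, the following is a minimal sketch of a semantic augmentation step. It is an illustration under stated assumptions, not the SAMOSA implementation: the function names (`encode_semantic`, `encode_auxiliary`, `decode`, `semantic_augment`), the dimensions, and the use of random linear maps in place of trained networks are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical dimensions; none of these values come from the paper.
INPUT_DIM, SEM_DIM, AUX_DIM = 16, 4, 6

# Random linear maps stand in for trained encoders and a trained decoder.
W_sem = rng.normal(size=(INPUT_DIM, SEM_DIM))
W_aux = rng.normal(size=(INPUT_DIM, AUX_DIM))
W_dec = rng.normal(size=(SEM_DIM + AUX_DIM, INPUT_DIM))


def encode_semantic(x):
    """Map inputs to a (hypothetical) semantic, class-related code."""
    return x @ W_sem


def encode_auxiliary(x):
    """Map inputs to a (hypothetical) non-semantic, auxiliary code."""
    return x @ W_aux


def decode(sem, aux):
    """Reconstruct an input from a semantic code and an auxiliary code."""
    return np.concatenate([sem, aux], axis=1) @ W_dec


def semantic_augment(x_labeled, y_labeled, x_unlabeled):
    """Combine semantic codes of labeled inputs with auxiliary codes of
    unlabeled inputs. The labels of the labeled inputs are reused as-is."""
    sem = encode_semantic(x_labeled)
    aux = encode_auxiliary(x_unlabeled)
    return decode(sem, aux), y_labeled


# Toy batch: three labeled and three unlabeled examples.
x_l = rng.normal(size=(3, INPUT_DIM))
y_l = np.array([0, 2, 1])
x_u = rng.normal(size=(3, INPUT_DIM))

x_aug, y_aug = semantic_augment(x_l, y_l, x_u)
```

In this sketch the augmented batch `x_aug` has the same shape as the inputs and carries the labels `y_l` unchanged, which mirrors the stated goal of preserving known class information while varying auxiliary content. In practice the encoders and decoder would be trained networks, and the disentangling would be learned rather than assumed.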