Abstract

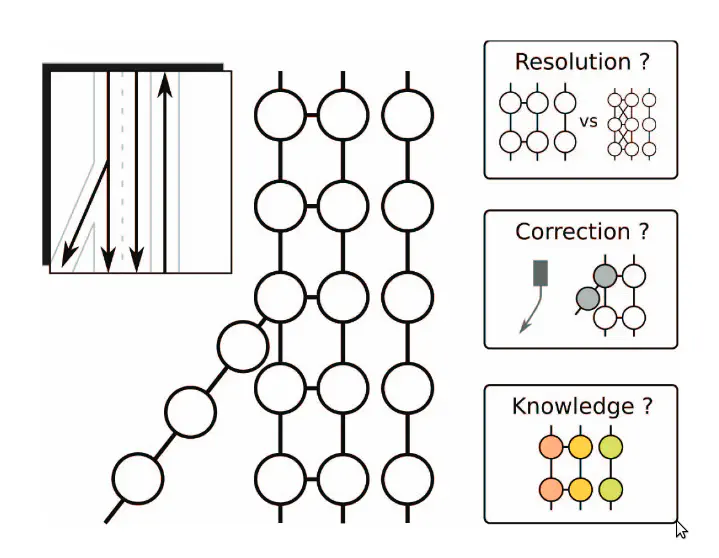

As deep learning tackles complex tasks such as trajectory forecasting for autonomous vehicles, new challenges emerge. In particular, autonomous driving requires accounting for extensive a priori knowledge in the form of HD maps. Graph representations have emerged as the most convenient way to encode this complex information. Nevertheless, little work has studied how this road graph should be constructed and how it influences forecasting solutions. In this paper, we explore the impact of spatial resolution, the graph’s relation to trajectory outputs, and how knowledge can be embedded into the graph. To this end, we conduct thorough experiments with two graph-based frameworks (PGP, LAformer) on the nuScenes dataset, with additional experiments on the LaneGCN framework and the Argoverse 1.1 dataset.

The work in this paper was extended in a preprint: “Reconciling feature sharing and multiple predictions with MIMO Vision Transformers”